Using LLMs like ChatGPT to Quickly Plan Better Lessons

Tips and tricks for efficiently increasing the quality of your lesson plans with the help of AI.

[image created with Dall-E 2 via Bing’s Image Creator]

Welcome to AutomatED: the newsletter on how to teach better with tech.

Each week, I share what I have learned — and am learning — about AI and tech in the university classroom. What works, what doesn't, and why.

In this week’s edition, I explain how I recommend using ChatGPT and other AI tools for lesson planning.

If you are teaching this fall, you either are already in the classroom or will be soon. As for me, my semester is in full swing. My preparations over the summer were less thorough than I would have liked — this happens every year, it seems — but I am getting into my groove.

Still, no amount of preparation could have readied me for the idiosyncrasies of my students. One crucial thing I could not foresee is how quickly I have moved and will move through course material, especially since every class session finds my students actively in dialogue with it, with me, and with each other.

Sometimes, students have more trouble learning something than past students did. Sometimes, students get more excited about a bit of content than I had expected. Sometimes, students are bored after immediately mastering something that took prior students much more time. Sometimes, small groups of students struggle to work together efficiently.

Even the best-laid plans (and mine are surely not that) must be flexibly altered in light of the realities on the ground. In other cases, I have not even started lesson planning. Looking at you, November classes…

Enter large language models (LLMs). Today, I will explain how they can significantly improve your lesson planning, both in terms of quality and efficiency.

🎭 The Prompt Frame: Role Simulation

When we think about what would make us more efficient and effective teachers, we often imagine aspects of our lessons and course design from other perspectives. We imagine who it would be nice to have around or whose mind we wish we could better access or understand. This is exactly the frame we should have when we approach LLMs.

The core idea for prompting LLMs effectively for lesson planning is simulation. Imagine that you are communicating with a simulated person who takes on the role you desire them to have: an expert teaching mentor giving pedagogical advice or, perhaps, an earnest (and surprisingly pedagogically aware) student telling you how they really feel about your course.

Like our colleagues, teaching mentors, and students, LLMs are not infallible. They cannot take into account all of the relevant factors. They make mistakes and hallucinate. They have biases and tendencies. However, they can see much of the picture, especially if we supply them with background information and context, and in the context of lesson planning their mistakes are rarely both significant and undetectable. As a result, they can provide very useful guidance on how we should plan to teach.

🧰 The AI Option Space

There is a range of LLMs suitable for lesson planning, with ChatGPT being the default. In case you are unaware, ChatGPT comes in two forms: ChatGPT3.5 and ChatGPT4. The former is free and can be accessed after you create an account at chat.openai.com. To access the latter, one option is to make an account and then pay for a premium membership (which costs $20 per month). You can also access ChatGPT4 for free via Bing Chat with Microsoft’s Edge browser or via AI tool aggregators like Poe. Another option is to use one of ChatGPT’s competitors, like Bard or Claude 2.

ChatGPT4 is more creative, better at inference and details, and more flexible than ChatGPT3.5. It also comes with faster response speeds and “beta” features like Plugins and Advanced Data Analysis (the mode formerly known as ‘Code Interpreter’). These latter features let you upload files to analyze and also give you access to a wider range of output formats.

At present, Bard handles image uploads, generates tables that can be transferred into Google Sheets, exports Python code, and reads outputs out loud, while Claude 2 handles a range of uploads and generates simple images.

In what follows, I will explain some of the ways I use LLMs for lesson planning, as well as variations using different LLMs’ distinctive functionalities and strengths.

📃 Creating Lesson Plans

LLMs are especially good at the core task of lesson planning: namely, creating full drafts of a lesson plan for a single specific class session.

When prompting LLMs for this purpose, there are, at minimum, six criteria that your prompt should meet. Your prompt should:

Encourage the LLM to take on a role that leverages pedagogically sound practices that fit with your own pedagogical outlook, such as formative assessments or active learning techniques.

Make clear the pedagogical context of the class session, such as the nature of the course in which it is found or the surrounding sessions’ content.

Enumerate the learning objectives that you intend to achieve with this session (or the standards that you aim to meet), such as understanding of a concept or mastery of a skill.

Clarify the practical constraints that the class session will be bound by, such as the length of the class session, the number of students you expect to attend, or the capabilities of the room in which it is held.

Present as much detail on your specific vision for the session as you want, such as that you want a given objective fulfilled in a specific manner (although, perhaps, you are open to the LLM filling in all the other details).

Tell the LLM what format you want its output to take, especially if it is non-standard for the genre.
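If you plan many sessions, the six criteria can be turned into a reusable prompt template. Below is a minimal Python sketch under stated assumptions: the `LessonRequest` class, the `build_prompt` function, the section wording, and the example values are all hypothetical illustrations, not a format that ChatGPT, Claude 2, or Bard requires. It simply joins your answers to the six criteria into one plain-text prompt that you could paste into any of them.

```python
from dataclasses import dataclass


@dataclass
class LessonRequest:
    """Hypothetical container: one field per prompt criterion."""
    role: str               # 1. role the LLM should adopt (and its pedagogical outlook)
    context: str            # 2. pedagogical context of the class session
    objectives: list[str]   # 3. learning objectives or standards
    constraints: list[str]  # 4. practical constraints (length, class size, room)
    vision: str = ""        # 5. your specific vision for the session (optional)
    output_format: str = "" # 6. desired output format (optional)


def build_prompt(req: LessonRequest) -> str:
    """Join the criteria into one plain-text prompt, one section per criterion."""
    parts = [
        f"You are {req.role}.",
        f"Context: {req.context}",
        "Learning objectives:\n" + "\n".join(f"- {o}" for o in req.objectives),
        "Constraints:\n" + "\n".join(f"- {c}" for c in req.constraints),
    ]
    # Optional criteria are only included when you fill them in.
    if req.vision:
        parts.append(f"My vision for the session: {req.vision}")
    if req.output_format:
        parts.append(f"Format your answer as: {req.output_format}")
    return "\n\n".join(parts)


if __name__ == "__main__":
    # Hypothetical example values, for illustration only.
    request = LessonRequest(
        role="an experienced teaching mentor who favors active learning and formative assessment",
        context="One session of an introductory critical thinking course.",
        objectives=["Students can identify the premises and conclusion of an argument"],
        constraints=["50-minute session", "about 25 students", "movable desks and one projector"],
        output_format="a numbered outline with time estimates for each step",
    )
    print(build_prompt(request))
```

Keeping each criterion in its own field means that, between revision cycles, you can change a single constraint (say, the session length) and regenerate the prompt instead of retyping it.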

Here is an example from one of my own courses this semester. In this prompt, I asked ChatGPT3.5 a simplified version of what I would ask an expert teacher colleague about the specific constraints surrounding tomorrow’s lesson (now today’s) in my critical thinking class, which is a philosophy course:

ChatGPT3.5’s first stab

I tried this prompt multiple times. While it worked quite well some of these times, a persistent problem was that the lesson plan was too ambitious. ChatGPT3.5 often proposed a lesson plan with a mini-lecture, two whole-class activities, and two separate small group exercises. In 50 minutes, this is extremely unlikely to work well, given student interjections and tangents in the whole-class activities, issues with collaboration and alignment in the small group exercises, and delays arising from the transitions between the various components. (In general, my view is that simpler lessons are better than complex ones — you can always add twists and details later, rather than bog students down from the get-go or expect too much throughout.)

This takes me to the most important aspect of using LLMs for lesson planning: always immediately demand any revisions of their outputs that come to mind. Or, if you already have a lesson plan that you created without the help of LLMs, always see whether an LLM can help you quickly improve it.

📝 Revising Lesson Plans

The cost of asking an LLM for a revision is extremely low. It takes a minute to explain the revisions and under 10 seconds for the LLM to generate a new output. (And if you already have a lesson plan you created without the help of LLMs, you can just paste it in the prompt window while flagging any of its weaknesses or ambiguities that worry you.) Further, LLMs are very good at addressing the specific points raised in criticisms of their (or others’) outputs.

Tell them what you would tell a human in their shoes. What went well? What did not? Just bang it out — do not waste time carefully formatting your input. Be brief and direct. If you make a mistake, you can just correct it in the next cycle.

This can be general, loose feedback that you leave ChatGPT to interpret, like the following:

Generic feedback that gives ChatGPT3.5 freedom in revision

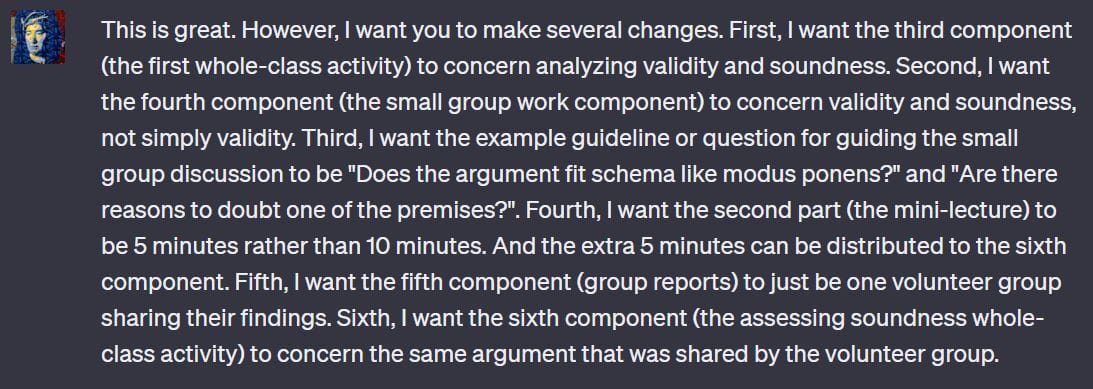

Or you can give very specific feedback, like the following:

Me trying to be almost overly precise with my concerns for ChatGPT3.5

If there are further issues, just rinse and repeat: make the same sort of demand for more revisions until you have what you want. Here was the net result of a 10-minute start-to-finish process for me:

5 minutes later and I have a good start to my lesson plan, courtesy of ChatGPT3.5

Sure, there are a few issues, like that I already have a different homework assignment planned and that step 5 refers to step 3 rather than step 4, but these are minor and I can correct them in Microsoft Word, where I can paste this initial draft for further edits.

Another angle for revisions would be to ask the LLM what a student would think of a lesson that proceeds according to such a plan. Even though the LLM might have just presented this plan as preferable, it can often identify notable (and strikingly apt) concerns about it from the student’s perspective. This is an advantage over a human interlocutor who might have produced a similar plan, as humans tend to be proud of what they have already asserted or created.

Here is a prompt I gave ChatGPT3.5 to elicit such a response, and here, too, is its response:

ChatGPT3.5 gives me three constructive criticisms from the perspective of a student

Given the iterative process of revision I am recommending here, I suggest that you open a new chat for each class session that you are planning. Label each chat with the date of the class session and the course name so that you can return and continue the iteration process if need be.

When comparing the various LLMs, I have found that Claude 2 is generally less ambitious than ChatGPT, which results in simpler, more basic lesson plans that require significant supplementation. This is good for straightforward, color-within-the-lines brainstorming.

Bard is tougher to wrangle than the others — especially in terms of format — but is much more creative. This is useful if you are not quite sure how you want to implement part of your lesson plan or if you have extra time for a more ambitious in-class activity. It will give you some wild ones, if you ask it to, and it will also press you harder with out-of-the-box criticisms.

Experiment with the various LLMs to see which is best to supply you with each of the components of your lesson plans. For instance, see which AI tools are best at generating sample problems or scenarios, discussion topics, assessment questions, guiding questions, or student-guided project options. You can then insert these into the overall lesson plan you are crafting, whether in an LLM or a word processor.

⬆️ Uploading Files for a Better Result

If you have access to ChatGPT4 or the other LLMs that allow file uploads (see above), there is a range of ways to use their file upload functionality to enhance this process. You can upload:

Prompts for homework assignments due shortly before or after the session.

Relevant slides from your past lectures or presentations.

Readings that you plan to assign in the vicinity of the lesson in question.

Other lesson plans similar to what you are looking for in this case.

It is crucial that you adjust your prompts if you upload accompanying files. Add content to your prompts that speaks to what the files contain, so that the LLM has information and guidance on how you want the files to shape its outputs.

For example, if you are supplying the LLM with a homework assignment that students will have completed the night before the class session you are planning, you need to tell the LLM why it is relevant. Do you want students to work in class to build on their homework submissions? How so? Be precise.

Claude 2 has file upload functionality natively, but ChatGPT4 requires you to enable it either in the form of plugins or via Advanced Data Analysis mode. To do so, click on your username in the lower left corner of your screen, select beta features, and turn on the features you want.

There are a few caveats that bear mentioning about the file upload functionality of the various LLMs:

PDFs can be troublesome. The main challenge is that their formatting, which makes them pleasing for us to read with PDF readers, makes it hard for plugins to parse their content. Then, even if they can be parsed, plugins need to index and sort their content in ways that match up with how we tend to conceptualize them and how we want to engage with them. Their length can also make it hard for LLMs to focus on the relevant parts.

There are tons of plugins for ChatGPT4. Tons! Try the more popular ones but don’t be afraid to tinker with others that you search for.

If ChatGPT is having trouble running its file-parsing plugins, you probably need to uninstall them via the plugin store and then reinstall them. OpenAI is working on this glitch but has not fixed it yet.

Claude 2 is limited to 5 files that are a maximum of 10MB each, while ChatGPT4 can handle much larger files.
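Because these limits are easy to trip over when you batch several readings and slide decks, a quick local check can save you a failed upload. This is a minimal Python sketch that hard-codes the Claude 2 limits stated above (5 files, 10MB each); those numbers may change, and treating 10MB as 10 × 1024 × 1024 bytes is an assumption. The function names `check_upload` and `sizes_on_disk` are hypothetical.

```python
from pathlib import Path

# Assumed Claude 2 limits, taken from the text above; check the current
# documentation before relying on them, since limits change.
MAX_FILES = 5
MAX_BYTES_PER_FILE = 10 * 1024 * 1024  # assumption: 1 MB = 1024 * 1024 bytes


def check_upload(sizes: dict[str, int]) -> list[str]:
    """Return problems with a planned upload; an empty list means it fits."""
    problems = []
    if len(sizes) > MAX_FILES:
        problems.append(f"{len(sizes)} files selected; the limit is {MAX_FILES}.")
    for name, size in sizes.items():
        if size > MAX_BYTES_PER_FILE:
            mb = size / (1024 * 1024)
            problems.append(f"{name} is {mb:.1f} MB; the limit is 10 MB per file.")
    return problems


def sizes_on_disk(paths: list[str]) -> dict[str, int]:
    """Read the size in bytes of each local file (hypothetical helper)."""
    return {p: Path(p).stat().st_size for p in paths}


if __name__ == "__main__":
    # Hypothetical sizes for illustration; use sizes_on_disk([...]) for real files.
    planned = {"reading.pdf": 12 * 1024 * 1024, "slides.pdf": 3 * 1024 * 1024}
    for problem in check_upload(planned):
        print(problem)
```

If the check flags an oversized PDF, splitting it into only the pages relevant to the session also helps with the focus problem mentioned above.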

Happy planning!

🔊 An Update on Our Learning Community

As many of you know already, AutomatED has a Learning Community, hosted on Circle.so, that complements the newsletter/blog.

There are two parts to the Learning Community.

First, there are the “low-stakes” parts, where members can do things like ask questions and brainstorm about AI and higher education, reflect on teaching methods that worked (or didn’t), or comment on AutomatED newsletter pieces.

Second, there are the “deep dive” parts, where the AutomatED team and community members are developing lengthy in-depth guides (like the one on discouraging and preventing AI misuse that we recently distributed via the newsletter), plug-and-play teaching templates, and databases of resources. We also plan to add webinars and micro-learning courses soon, taught by domain experts from beyond the AutomatED team.

We are making some changes to the Learning Community. Starting today, the “low-stakes” parts of the community will be easier to access: no referrals and no fee will be required to join them. Only a Circle account will be required, which you can create here: https://automated.circle.so/.

The reason for removing our prior 2-referral requirement is that we want the Learning Community to become a vibrant and inclusive place for the free exchange of ideas among people interested in the intersection of tech/AI and higher ed. The requirement prevented the Learning Community from playing this role. For those of you who have reached out to say as much, fair enough: you were right.

In the coming weeks, we will be providing an update on accessing the “deep dive” parts, but the short story is this: there will be a paywall paired with discounts for subscribers who make newsletter referrals. It takes a lot of time, effort, and money to curate this second tier of content. By creating this system, we can curate more and better resources.


If you have already made referrals, we will reach out in the coming weeks as we make these changes.